Devfest Cloud 2023

라이언의 꿀팁백과

= Your guide to tuning foundation models = | |||

스피커 : Erwin Huizenga (Staff Developer Advocate, Machine Learning Tech Lead) | |||

파운데이션 모델은 사전에 엄청나게 많은 양의 데이터를 학습한 AI 모델을 의미 | |||

== Why tuning? == | |||

원하는 목적에 최적화된 결과를 얻기 위해서. '''Take-specific tuning can make LMMs more reliable.''' | |||

== Limitations of prompt design == | |||

* Small changes in wording or word order can impact model results | |||

* Inconsistent results | |||

* Lots of trial and error | |||

* Long prompts take up context window | |||

It's non-deterministic! | |||

Vertex AI tuning services | |||

Here, we only cover two ways of tunings: | |||

# Supervised Fine Tuning | |||

# Reinforcement Learning from Human Feedback | |||

== Tuning foundation models == | |||

'''Full Fine Tuning''' : update <u>all</u> model weights | |||

'''Adapter Tuning''' : update a <u>small set</u> of weights | |||

== Adapter tuning == | |||

* Train a small subset of parameters | |||

* Parameters might be a subset of the existing model parameters or an entirely new set of parameters | |||

=== Training benefits of adapter tuning === | |||

* Fewer parameters to update | |||

* Faster training | |||

* Reduction in memory usage | |||

* <u>Less training data needed</u> | |||

=== Serving benefits of Adapter Tuning === | |||

* Shared deployment of a foundation model | |||

** 파운데이션 모델에 바꿔가면서 최적화 한 weights 을 손쉽게 적용 가능 | |||

* Augment with adapter weights specific to a particular task at runtime | |||

== Supervised Fine Tuning == | |||

Tuning with labeled dataset in the format: | |||

'''{prompt, expected output}''' | |||

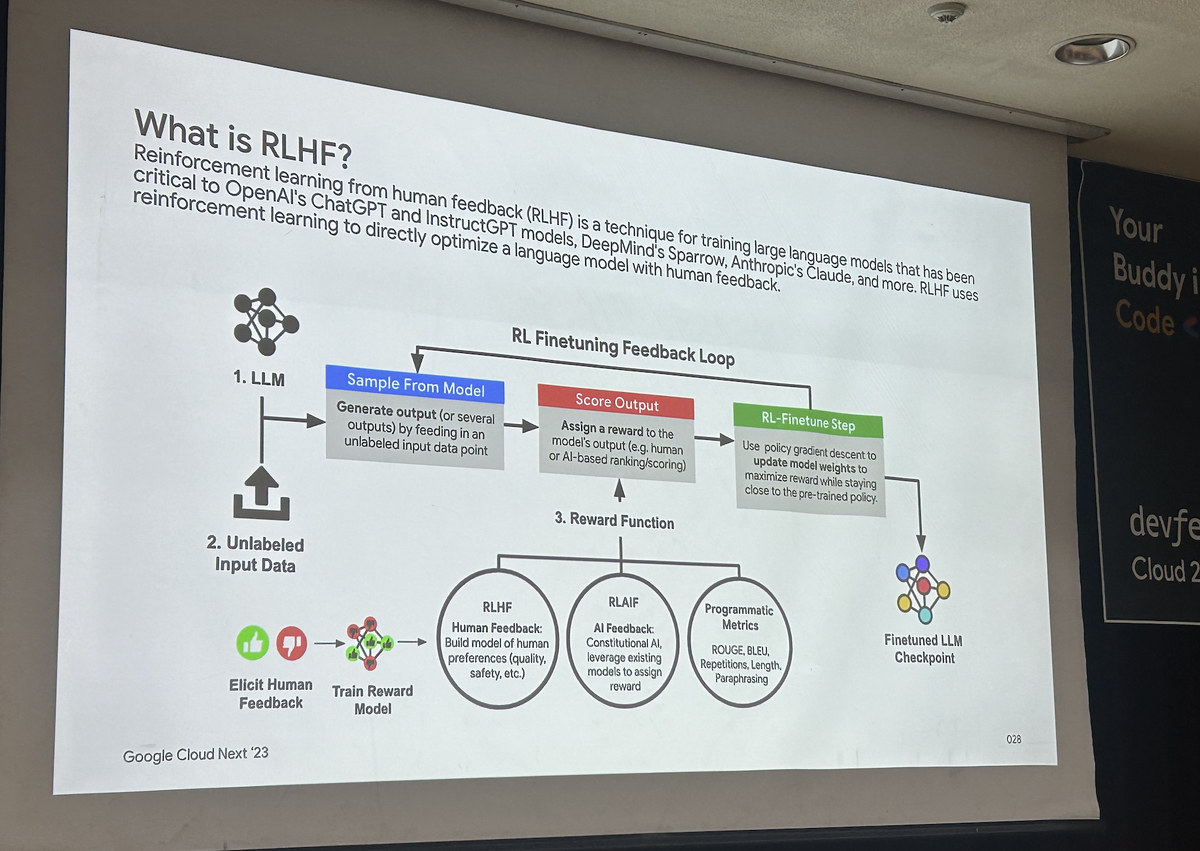

== RLHF == | |||

When the expected output is difficult to define | |||

이거 두 개 읽어라 | |||

* https://arxiv.org/abs/1706.03741 | |||

* https://arxiv.org/abs/2203.02155 | |||

[[파일:RLHF-devfest-cloud-2023.png|가운데|프레임없음|1200x1200픽셀]] | |||

== Gemini == | |||

Google's first foundation model that's built from the ground up to be multimodal. | |||

* Gemini Ultra | |||

* Gemini Pro | |||

* Gemini Nano | |||

== References == | |||

* https://cloud.google.com/vertex-ai/ | |||

* https://cloud.google.com/blog/topics/google-cloud-next/welcome-to-google-cloud-next-23 | |||

* https://blog.google.com/technology/ai/google-gemini-ai/ | |||

*https://developers.googleblog.com/2023/12/how-its-made-gemini-multimodal-prompting.html | |||

*https://blog.google/technology/ai/google-gemini-ai/ | |||

Latest revision as of 10:59, 10 December 2023 (Sun)

1 <Keynote> Building Your Tech Career with Developer Communities

Speaker : Kristine Song, Developer Ecosystem (DevRel) team (8 years at Google; currently on a third team, previously in HR)

1.1 Why communities matter?

- Learn

- Connect

- Inspire

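Recently, hiring looks at many aspects of a candidate, such as GitHub, Stack Overflow, and community contributions. Online activity is used to gauge things like how eager the candidate is to learn.

1.2 Building your tech career - Growth opportunities

low hanging magic fruits : writing tutorials, contributing to open source, speaking at events, etc.

high hanging magic fruits : founding a startup, etc.

low hanging common fruits : joining communities, reading books, etc.

There is no need to do things that take a lot of effort but have no effect. Go travel instead.

There are 13 Google Developer Experts in Korea.

1.3 References

- Event homepage https://festa.io/events/4385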

- Google Developer Groups https://developers.google.com/community/gdg

2 Build LLM-powered apps with embeddings, vector search and RAG

Speaker : Kaz Sato (Staff Developer Advocate (ML) @ Google Cloud, Tokyo)

Vertex AI Embeddings API + Vector Search - Grounding LLMs made easy

Integrating LLMs with existing IT systems without hallucination is crucial in business. LLMs are just probabilistic language models, which is why their answers can contain hallucinations.

RAG combines an LLM with Vertex AI to eliminate hallucination.

What are embeddings?

Embeddings translate data into vectors. AI builds an embedding space as a map of meaning.

An embedding is a relatively low-dimensional space into which you can translate high-dimensional vectors. Embeddings make it easier to do machine learning on large inputs like sparse vectors representing words. Ideally, an embedding captures some of the semantics of the input by placing semantically similar inputs close together in the embedding space. An embedding can be learned and reused across models.
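
To make "semantically similar inputs close together" concrete, here is a minimal sketch that compares vectors by cosine similarity. The three-dimensional vectors and the names `TOY_EMBEDDINGS` and `most_similar` are hand-written assumptions for illustration; they are not output from any real embedding model or API.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical 3-dimensional embeddings, hand-written for illustration only.
# A real embedding model produces vectors with far more dimensions.
TOY_EMBEDDINGS = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.8, 0.2, 0.1],
    "invoice": [0.0, 0.1, 0.9],
}


def most_similar(word, embeddings):
    """Return the other word whose vector is closest to `word`."""
    query = embeddings[word]
    candidates = [
        (other, cosine_similarity(query, vec))
        for other, vec in embeddings.items()
        if other != word
    ]
    return max(candidates, key=lambda pair: pair[1])[0]
```

With these toy vectors, `most_similar("cat", TOY_EMBEDDINGS)` picks "kitten" rather than "invoice", which is the "map of meaning" idea in miniature.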

Vertex AI Embeddings API for Text

By calling the APIs provided by Google you can easily utilize embedding technologies.

ScaNN : The vector search service for Google Search

2.1 RAG

Retrieval Augmented Generation (RAG) is used to beat hallucination by using vector search to support the LLM.
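
The flow can be sketched as "retrieve the closest passages, then put them in the prompt". The following is a toy sketch under stated assumptions: the document store `DOCS`, its two-dimensional vectors, and the helpers `retrieve` and `build_prompt` are all hypothetical, and no real vector search service or LLM is called.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical document store: (text, embedding) pairs with made-up vectors.
DOCS = [
    ("Refunds are processed within 5 business days.", [0.9, 0.1]),
    ("The office is closed on public holidays.", [0.1, 0.9]),
]


def retrieve(query_vec, docs, k=1):
    """Return the texts of the k documents most similar to the query vector."""
    ranked = sorted(
        docs,
        key=lambda doc: cosine_similarity(query_vec, doc[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]


def build_prompt(question, passages):
    """Ground the question in retrieved passages before sending it to an LLM."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )
```

In a real system the query vector would come from an embedding model and the ranking from a vector search index; the brute-force `sorted` call here only stands in for that step.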

2.2 VLM

LLMs with vision change businesses.

2.3 Reference

- https://ai-demos.dev/

- https://github.com/GoogleCloudPlatform/vertex-ai-samples/tree/main

- https://developers.google.com/machine-learning/crash-course/embeddings/video-lecture

- https://atlas.nomic.ai/map/vertex-stack-overflow

3 Your guide to tuning foundation models

Speaker : Erwin Huizenga (Staff Developer Advocate, Machine Learning Tech Lead)

A foundation model is an AI model that has been pre-trained on an enormous amount of data.

3.1 Why tuning?

To get results optimized for the intended purpose. Task-specific tuning can make LLMs more reliable.

3.2 Limitations of prompt design

- Small changes in wording or word order can impact model results

- Inconsistent results

- Lots of trial and error

- Long prompts take up context window

It's non-deterministic!

Vertex AI tuning services

Here, we only cover two tuning methods:

- Supervised Fine Tuning

- Reinforcement Learning from Human Feedback

3.3 Tuning foundation models

Full Fine Tuning : update all model weights

Adapter Tuning : update a small set of weights

3.4 Adapter tuning

- Train a small subset of parameters

- Parameters might be a subset of the existing model parameters or an entirely new set of parameters
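
As a rough illustration of why a "small set of weights" matters, the sketch below compares trainable parameter counts for one weight matrix. It assumes a low-rank adapter (two small matrices added next to a frozen weight matrix), which is only one possible form of adapter; the talk notes do not say which adapter technique is used, and the function names are hypothetical.

```python
def full_fine_tuning_params(d_in, d_out):
    """Trainable parameters when every weight of a d_in x d_out matrix is updated."""
    return d_in * d_out


def low_rank_adapter_params(d_in, d_out, rank):
    """Trainable parameters for an assumed low-rank adapter.

    The original matrix stays frozen; only two new matrices of shape
    (d_in x rank) and (rank x d_out) are trained.
    """
    return d_in * rank + rank * d_out


def trainable_fraction(d_in, d_out, rank):
    """Share of the full matrix size that the adapter needs to train."""
    return low_rank_adapter_params(d_in, d_out, rank) / full_fine_tuning_params(d_in, d_out)
```

For a 1024 x 1024 matrix and rank 8, the adapter trains 16,384 parameters instead of 1,048,576, about 1.6% of the full count.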

3.4.1 Training benefits of adapter tuning

- Fewer parameters to update

- Faster training

- Reduction in memory usage

- Less training data needed

3.4.2 Serving benefits of Adapter Tuning

- Shared deployment of a foundation model

- Tuned weights can easily be swapped in and out on top of the foundation model

- Augment with adapter weights specific to a particular task at runtime

3.5 Supervised Fine Tuning

Tuning with a labeled dataset in the format:

{prompt, expected output}
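
A labeled dataset of this shape is commonly stored as one JSON object per line (JSONL). The sketch below shows that layout; the field names `prompt` and `expected_output` are assumptions chosen to mirror the format above, not the field names required by any particular tuning service.

```python
import json


def to_jsonl(examples):
    """Serialize (prompt, expected_output) pairs as JSON Lines text.

    Field names are hypothetical; check the tuning service's documentation
    for the names it actually expects.
    """
    lines = []
    for prompt, expected in examples:
        if not prompt or not expected:
            raise ValueError("every example needs a prompt and an expected output")
        record = {"prompt": prompt, "expected_output": expected}
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)
```

Rejecting empty fields up front keeps unlabeled rows out of the training file, where they would otherwise be hard to spot.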

3.6 RLHF

Used when the expected output is difficult to define.

Read these two papers:

- https://arxiv.org/abs/1706.03741

- https://arxiv.org/abs/2203.02155

3.7 Gemini

Google's first foundation model that's built from the ground up to be multimodal.

- Gemini Ultra

- Gemini Pro

- Gemini Nano

3.8 References

- https://cloud.google.com/vertex-ai/

- https://cloud.google.com/blog/topics/google-cloud-next/welcome-to-google-cloud-next-23

- https://blog.google.com/technology/ai/google-gemini-ai/

- https://developers.googleblog.com/2023/12/how-its-made-gemini-multimodal-prompting.html

- https://blog.google/technology/ai/google-gemini-ai/

4 Open Source Large-scale Streaming Analytics Using Apache Beam

Speaker : Matthias Baetens (Singapore GDE, Senior Cloud Architect @ DoiT)

Keywords : Apache Beam, Google Cloud Dataflow

4.1 Technical intro

Scaling up has limits: a single machine cannot be made bigger forever. That is why scaling out, using distributed processing, came into use.

Stream processing makes it possible to gather data more frequently.

Event time : the time something happened

Processing time : the time the event is processed (always some time later than its event time)

Stream processing : Windowing

To handle unbounded stream data, we need to set boundaries (windows) so that the data inside each boundary can be processed together.
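
The idea of grouping by event time can be sketched in plain Python with fixed-size windows. This is not Apache Beam code; `window_start` and `assign_fixed_windows` are hypothetical helpers, and timestamps are assumed to be integer seconds.

```python
from collections import defaultdict


def window_start(event_time, window_size):
    """Start of the fixed window that contains `event_time`."""
    return event_time - (event_time % window_size)


def assign_fixed_windows(events, window_size):
    """Group (event_time, value) pairs by window, in arrival order.

    The list order represents processing order, so an event may arrive
    after events that happened later than it did.
    """
    windows = defaultdict(list)
    for event_time, value in events:
        windows[window_start(event_time, window_size)].append(value)
    return dict(windows)
```

For example, with 60-second windows, an event stamped 59 that arrives after an event stamped 61 still lands in the window starting at 0, because the grouping uses event time rather than processing time.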

Stream processing : Triggering

Trade-offs:

- Completeness

- Latency

- Cost $$$

4.1.1 Apache Beam

- Supports Java, Python, and Go

4.1.2 Google Cloud Dataflow

- Fully managed data processing solution

- Autoscaling of resources

- Optimisation (graph, dynamic work rebalancing,...)

- Monitoring and logging

4.2 Use cases

- Dynamic pricing of Lyft rides using Apache Beam

- Feature Generation at LinkedIn

- Quantifying counterparty credit risk

- Large scale streaming analytics using Apache Beam & Cloud Dataflow

4.3 Demo

https://bit.ly/matthias-beam-demo

4.4 References

- https://beam.apache.org/

- https://cloud.google.com/customers/hsbc-risk-advisory-tool

- https://www.oreilly.com/radar/the-world-beyond-batch-streaming-101/

- https://www.oreilly.com/radar/the-world-beyond-batch-streaming-102/

5 Everything about ChatGPT-Slack integration

Speaker : 백재연, Google Cloud GDE

Keywords : Cloud Run or Cloud Functions (serverless products that run only when a request comes in), Google Cloud Memorystore

Slack requires a response within 3 seconds, so the app must acknowledge the request first and then run the ChatGPT request, and the handling of its response, in the background.
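
The "acknowledge first, work later" pattern can be sketched with a background thread. This is a simplified sketch, not the speaker's implementation: `handle_slack_event`, `call_llm`, and `post_message` are hypothetical names, and the event is assumed to be a dict with `text` and `channel` keys.

```python
import threading


def handle_slack_event(event, call_llm, post_message):
    """Acknowledge immediately and answer from a background thread.

    `call_llm` and `post_message` are injected callables (hypothetical),
    so the slow model call never blocks the acknowledgement.
    """

    def work():
        answer = call_llm(event["text"])
        post_message(event["channel"], answer)

    worker = threading.Thread(target=work, daemon=True)
    worker.start()
    # Returned right away, well inside the 3-second limit.
    return {"status": 200, "body": ""}, worker
```

Returning the worker thread is only a convenience so callers and tests can wait for it; a production handler would more likely hand the work to a queue or task service.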

You need to decide whether or not to stream the response in Slack.

Passing stream=True to the ChatCompletion.create method provided by OpenAI makes the ChatGPT response arrive as a stream.
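
If the response is streamed, the Slack message has to be updated as text arrives. The sketch below shows one way to batch those updates; it assumes the stream has already been reduced to an iterable of text fragments, and `progressive_messages` is a hypothetical helper, not part of the OpenAI or Slack libraries.

```python
def progressive_messages(deltas, flush_every=3):
    """Yield the accumulated text after every `flush_every` fragments.

    A final yield covers any fragments left over at the end, so the last
    value is always the complete text.
    """
    text = ""
    pending = 0
    for delta in deltas:
        text += delta
        pending += 1
        if pending >= flush_every:
            yield text
            pending = 0
    if pending:
        yield text
```

Each yielded string would replace the previous message content. Batching several fragments per update keeps the number of Slack API calls well below the number of streamed fragments.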

In a production system, a throttling server that controls traffic should sit in between so that communication between Slack and ChatGPT is handled reliably.
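
One common way to throttle traffic is a token bucket. The notes do not say which algorithm the throttling server uses, so the following is an assumed, minimal sketch; the `TokenBucket` class and its injectable `clock` parameter are hypothetical.

```python
import time


class TokenBucket:
    """Allow at most `capacity` requests in a burst, refilled at `rate_per_sec`."""

    def __init__(self, rate_per_sec, capacity, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.clock = clock  # injectable so the bucket can be tested without sleeping
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self):
        """Return True and spend one token if the request may proceed."""
        now = self.clock()
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

Requests that get `False` could be queued or retried later rather than dropped, depending on how much delay the Slack conversation can tolerate.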

terraform init

terraform plan

terraform apply

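The term "cold start" always comes up with serverless products. It refers to the time from receiving a request until the server and app are up and running. To reduce cold starts:

- Scale up compute resources

- lazy initialization of global variables

- Keep instances warm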

5.1 References

- https://jybaek.tistory.com/