VLA

라이언의 꿀팁백과 (Ryan's Tips Encyclopedia)

Revision as of 20:53, 15 February 2026 (Sunday) by Ryanyang (new page)


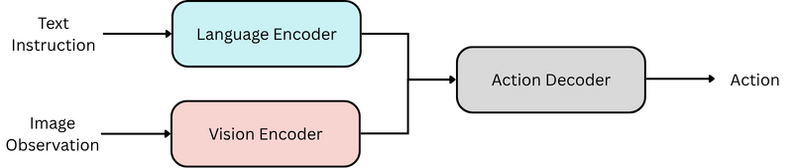

VLA (Vision-Language-Action Model). In robot learning, a vision-language-action model (VLA) is a class of multimodal foundation models that integrates vision, language and actions. Given an input image (or video) of the robot's surroundings and a text instruction, a VLA directly outputs low-level robot actions that can be executed to accomplish the requested task. (Source: https://en.wikipedia.org/wiki/Vision-language-action_model)
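To make the input/output contract above concrete, here is a minimal Python sketch of the data flow: a camera frame and a text instruction go in, one low-level action vector comes out. Every component (`encode_image`, `encode_text`, `action_head`, `vla_policy`) is a hypothetical, untrained stand-in; a real VLA would use a pretrained vision encoder and a large language-model backbone instead. The 7-dimensional action (3 translation deltas, 3 rotation deltas, 1 gripper command) is also an assumption, picked because it is a common end-effector parameterization.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence

# All components below are hypothetical, untrained stand-ins that only
# illustrate the flow "image + instruction -> low-level action".

ACTION_DIM = 7  # assumption: 3 translation + 3 rotation deltas + 1 gripper command


@dataclass
class Observation:
    image: List[List[float]]  # toy grayscale frame, pixel values in [0, 1]
    instruction: str          # natural-language task description


def encode_image(image: List[List[float]]) -> List[float]:
    """Toy vision encoder: one feature per image row (mean intensity)."""
    return [sum(row) / len(row) for row in image]


def encode_text(text: str, dim: int = 8) -> List[float]:
    """Toy language encoder: normalized bag-of-characters histogram."""
    counts = [0.0] * dim
    for ch in text.lower():
        counts[ord(ch) % dim] += 1.0
    total = sum(counts) or 1.0
    return [c / total for c in counts]


def action_head(features: Sequence[float]) -> List[float]:
    """Toy action decoder: fixed linear map, then squash into valid ranges."""
    raw = [
        sum(math.sin(i + j) * f for j, f in enumerate(features))
        for i in range(ACTION_DIM)
    ]
    arm = [math.tanh(r) for r in raw[:6]]      # arm deltas squashed into [-1, 1]
    gripper = 1.0 / (1.0 + math.exp(-raw[6]))  # gripper command squashed into [0, 1]
    return arm + [gripper]


def vla_policy(obs: Observation) -> List[float]:
    """Fuse vision and language features by concatenation, then decode one action."""
    fused = encode_image(obs.image) + encode_text(obs.instruction)
    return action_head(fused)


if __name__ == "__main__":
    frame = [[0.1, 0.5, 0.9], [0.2, 0.4, 0.6]]
    action = vla_policy(Observation(frame, "pick up the red cup"))
    print([round(a, 3) for a in action])
```

The point of the sketch is the shape of the interface, not the quality of the policy: in practice the policy is called in a closed loop, receiving a fresh frame after each executed action.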

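VLA models (Vision-Language-Action Models) are robot foundation models that take inputs such as text, video, and demonstrations and generate actions. In other words, they are a kind of generative AI that runs inside AI-powered robots. (Source: https://namu.wiki/w/VLA)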
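One way a VLA can "generate" actions the way a language model generates words is to turn each continuous action dimension into a discrete token. RT-2, for example, discretizes each dimension into 256 uniform bins; other VLAs instead predict continuous actions with a dedicated action head. The sketch below shows only the binning step, assuming every dimension is normalized to [-1, 1]; the function names and default bounds are hypothetical.

```python
from typing import List, Sequence


def tokenize_action(action: Sequence[float], low: float = -1.0,
                    high: float = 1.0, n_bins: int = 256) -> List[int]:
    """Map each continuous action dimension to a discrete bin index."""
    width = (high - low) / n_bins
    tokens = []
    for x in action:
        x = min(max(x, low), high)           # clip to the valid range
        idx = int((x - low) / width)         # floor, since x - low >= 0
        tokens.append(min(idx, n_bins - 1))  # x == high falls into the last bin
    return tokens


def detokenize_action(tokens: Sequence[int], low: float = -1.0,
                      high: float = 1.0, n_bins: int = 256) -> List[float]:
    """Map bin indices back to continuous values (the bin centers)."""
    width = (high - low) / n_bins
    return [low + (t + 0.5) * width for t in tokens]


if __name__ == "__main__":
    action = [0.0, -1.0, 1.0, 0.5, 0.123, -0.7, 0.9]
    tokens = tokenize_action(action)
    print(tokens)
    print(detokenize_action(tokens))
```

Decoding to bin centers bounds the round-trip error by half a bin width (here 1/256), which is the precision traded away for being able to reuse a token-generating backbone.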